Michigan State University researchers have discovered how to use vibrations, usually an obstacle in quantum computing, as a tool to stabilize quantum states. Their research provides insights into controlling environmental factors in quantum systems and has implications for the advancement of quantum technology.

When quantum systems, such as those used in quantum computers, operate in the real world, they can lose information to mechanical vibrations.

New research led by Michigan State University, however, shows that a better understanding of the coupling between the quantum system and these vibrations can be used to mitigate that loss.

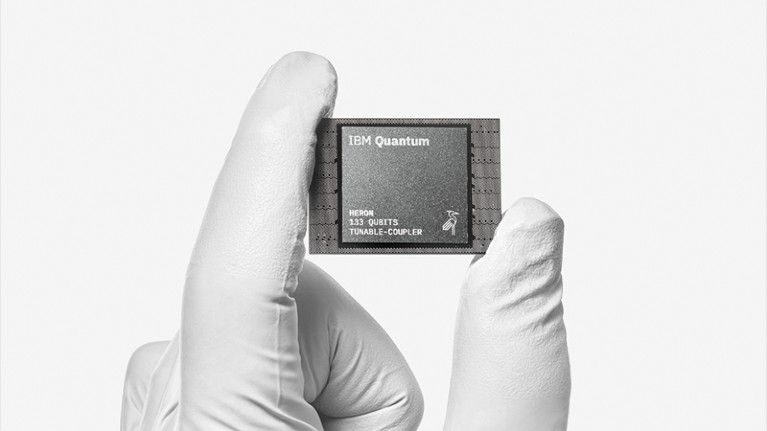

The research, published in the journal Nature Communications, could help improve the design of quantum computers that companies such as IBM and Google are currently developing.

The Challenge of Isolation in Quantum Computing

Nothing exists in a vacuum, but physicists often wish this weren’t the case. If the systems that scientists study could be completely isolated from the outside world, things would be a lot easier.

Take quantum computing. It’s a field that’s already drawing billions of dollars in support from tech investors and industry heavyweights including IBM, Google, and Microsoft. But if even the tiniest vibrations creep in from the outside world, they can cause a quantum system to lose information.

For instance, even light can cause information leaks if it has enough energy to jiggle the atoms within a quantum processor chip.

The Problem of Vibrations

“Everyone is really excited about building quantum computers to answer really hard and important questions,” said Joe Kitzman, a doctoral student at Michigan State University. “But vibrational excitations can really mess up a quantum processor.”

However, with new research published in the journal Nature Communications, Kitzman and his colleagues are showing that these vibrations need not be a hindrance. In fact, they could benefit quantum technology.