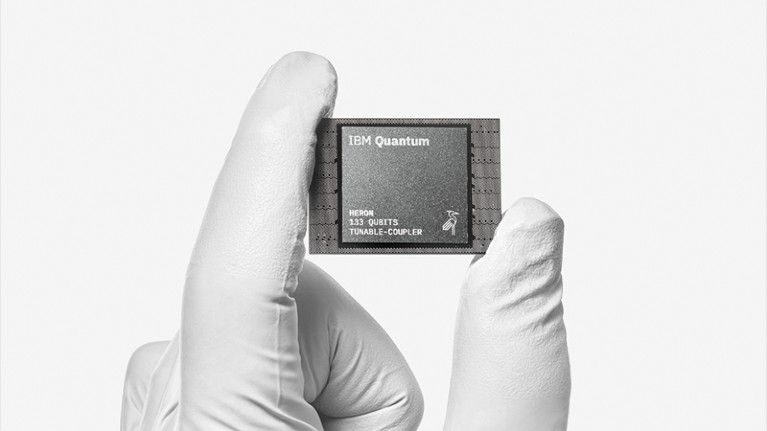

One of IBM’s latest quantum processors has improved the reliability of its qubits. Credit: Ryan Lavine for IBM

IBM has unveiled the first quantum computer with more than 1,000 qubits — the equivalent of the digital bits in an ordinary computer. But the company says it will now shift gears and focus on making its machines more error-resistant rather than larger.

For years, IBM has been following a quantum-computing road map that roughly doubled the number of qubits every year. The chip unveiled on 4 December, called Condor, has 1,121 superconducting qubits arranged in a honeycomb pattern. It follows on from its other record-setting, bird-named machines, including a 127-qubit chip in 2021 and a 433-qubit one last year.
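As a rough check on that growth rate, the short Python sketch below computes year-over-year ratios from the three qubit counts quoted above. The calendar years assigned to "last year" (2022) and to Condor (2023) are assumptions inferred from the 4 December unveiling, and the function name `yearly_growth` is purely illustrative.

```python
# Qubit counts quoted in the text. The years 2022 and 2023 are assumptions
# inferred from "last year" and the 4 December unveiling of Condor.
qubit_counts = {2021: 127, 2022: 433, 2023: 1121}

def yearly_growth(counts):
    """Return {year: ratio of that year's count to the previous year's}."""
    years = sorted(counts)
    return {year: counts[year] / counts[prev]
            for prev, year in zip(years, years[1:])}

growth = yearly_growth(qubit_counts)
for year, ratio in growth.items():
    print(f"{year}: x{ratio:.2f}")
```

On these three data points alone, each step is larger than a doubling, so "roughly doubled" is best read as a loose summary of the road map rather than an exact rate.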

Quantum computers promise to perform certain computations that are beyond the reach of classical computers. They will do so by exploiting uniquely quantum phenomena such as entanglement and superposition, which allow multiple qubits to exist in multiple collective states at once. READ MORE...

Showing posts with label Quantum Computer. Show all posts

Wednesday, December 6

More Than 1,000 Qubits

Friday, May 27

The Revolutionary World of Quantum Computers

The inside of an IBM System One quantum computer. Bryan Walsh/Vox

A few weeks ago, I woke up unusually early in the morning in Brooklyn, got in my car, and headed up the Hudson River to the small Westchester County community of Yorktown Heights. There, amid the rolling hills and old farmhouses, sits the Thomas J. Watson Research Center, the Eero Saarinen-designed, 1960s Jet Age-era headquarters for IBM Research.

Deep inside that building, through endless corridors and security gates guarded by iris scanners, is where the company’s scientists are hard at work developing what IBM director of research Dario Gil told me is “the next branch of computing”: quantum computers.

I was at the Watson Center to preview IBM’s updated technical roadmap for achieving large-scale, practical quantum computing. This involved a great deal of talk about “qubit count,” “quantum coherence,” “error mitigation,” “software orchestration” and other topics you’d need to be an electrical engineer with a background in computer science and a familiarity with quantum mechanics to fully follow.

I am not any of those things, but I have watched the quantum computing space long enough to know that the work being done here by IBM researchers — along with their competitors at companies like Google and Microsoft, along with countless startups around the world — stands to drive the next great leap in computing. Which, given that computing is a “horizontal technology that touches everything,” as Gil told me, will have major implications for progress in everything from cybersecurity to artificial intelligence to designing better batteries.

Provided, of course, they can actually make these things work.

Entering the quantum realm

The best way to understand a quantum computer — short of setting aside several years for grad school at MIT or Caltech — is to compare it to the kind of machine I’m typing this piece on: a classical computer.

My MacBook Air runs on an M1 chip, which is packed with 16 billion transistors. Each of those transistors can represent either the “1” or “0” of binary information at a single time — a bit. The sheer number of transistors is what gives the machine its computing power.
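To make the idea of a bit concrete, here is a minimal Python sketch that lists every pattern a small group of classical bits can hold. The helper name `bit_patterns` is hypothetical, and three bits stand in for the chip's billions of transistors. A register of n bits stores exactly one of these 2^n patterns at any given moment.

```python
from itertools import product

def bit_patterns(n_bits):
    """List every pattern that n_bits classical bits can hold (one at a time)."""
    return ["".join(bits) for bits in product("01", repeat=n_bits)]

patterns = bit_patterns(3)
print(len(patterns))  # 8
print(patterns)
```

Each added bit doubles the number of possible patterns, which is why the count grows so quickly with the number of transistors.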

Sixteen billion transistors packed onto a 120.5 sq. mm chip is a lot — TRADIC, the first transistorized computer, had fewer than 800. The semiconductor industry’s ability to engineer ever more transistors onto a chip, a trend forecast by Intel co-founder Gordon Moore in the law that bears his name, is what has made possible the exponential growth of computing power, which in turn has made possible pretty much everything else. READ MORE...

Tuesday, March 15

Atom by Atom

Quantum computers could be constructed cheaply and reliably using a new technique perfected by a University of Melbourne-led team that embeds single atoms in silicon wafers, one-by-one, mirroring methods used to build conventional devices, in a process outlined in an Advanced Materials paper.

The new technique – developed by Professor David Jamieson and co-authors from UNSW Sydney, Helmholtz-Zentrum Dresden-Rossendorf (HZDR), Leibniz Institute of Surface Engineering (IOM), and RMIT – can create large-scale patterns of counted atoms that are controlled so their quantum states can be manipulated, coupled and read out.

The lead author of the paper, Professor Jamieson, said his team’s vision was to use this technique to build a very, very large-scale quantum device.

“We believe we ultimately could make large-scale machines based on single-atom quantum bits by using our method and taking advantage of the manufacturing techniques that the semiconductor industry has perfected,” Professor Jamieson said.

The technique takes advantage of the precision of the atomic force microscope, which has a sharp cantilever that “touches” the surface of a chip with a positioning accuracy of just half a nanometre, about the same as the spacing between atoms in a silicon crystal.

The team drilled a tiny hole in this cantilever, so that when it was showered with phosphorus atoms one would occasionally drop through the hole and embed in the silicon substrate.

The key was knowing precisely when one atom – and no more than one – had become embedded in the substrate. Then the cantilever could move to the next precise position on the array.

The team discovered that the kinetic energy of the atom as it plows into the silicon crystal and dissipates its energy by friction can be exploited to make a tiny electronic “click.” READ MORE...
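The place-one-atom-then-move procedure described above can be sketched as a simple loop. This is a toy simulation under stated assumptions, not the team's control software: the per-exposure detection probability `p_click`, the function name `implant_array`, and the simplification that every click corresponds to exactly one atom are all hypothetical.

```python
import random

def implant_array(n_sites, p_click=0.05, seed=0):
    """Simulate placing one atom per site, moving on only after a "click".

    p_click is a made-up probability that, during one exposure, an atom
    passes through the hole and registers a detection click.
    """
    rng = random.Random(seed)
    exposures_per_site = []
    for _ in range(n_sites):
        exposures = 0
        while True:
            exposures += 1
            if rng.random() < p_click:  # click detected: one atom embedded
                break                   # stop exposing this site
        exposures_per_site.append(exposures)  # cantilever moves to next site
    return exposures_per_site

counts = implant_array(n_sites=5)
print(counts)  # number of exposures needed at each of the 5 sites
```

The point of the sketch is the control flow: the cantilever stays put until a single detection event is recorded, so each site ends up with a counted atom regardless of how many exposures that takes.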

Wednesday, February 23

Quantum Computer Mind

Quick: what’s 4 + 5? Nine, right? Slightly less quick: what’s five plus four? Still nine, right?

Okay, let’s wait a few seconds. Bear with me. Feel free to have a quick stretch.

Now, without looking, what was the answer to the first question?

It’s still nine, isn’t it?

You’ve just performed a series of advanced brain functions. You did math based on prompts designed to appeal to entirely different parts of your brain and you displayed the ability to recall previous information when queried later. Great job!

This might seem like old hat to most of us, but it’s actually quite an amazing feat of brain power.

And, based on some recent research by a pair of teams from the University of Bonn and the University of Tübingen, these simple processes could indicate that you’re a quantum computer.

Let’s do the math

Your brain probably isn’t wired for numbers. It’s great at math, but numbers are a relatively new concept for humans.

Numbers showed up in human history approximately 6,000 years ago with the Mesopotamians, but our species has been around for about 300,000 years.

Prehistoric humans still had things to count. They didn’t randomly forget how many children they had just because there wasn’t a bespoke language for numerals yet.

Instead, they found other methods for expressing quantities or tracking objects such as holding up their fingers or using representative models.

If you had to keep track of dozens of cave-mates, for example, you might carry a pebble to represent each one. As people trickled in from a hard day of hunting, gathering, and whatnot, you could shift the pebbles from one container to another as an accounting method.

It might seem sub-optimal, but the human brain really doesn’t care whether you use numbers, words, or concepts when it comes to math.

Let’s do the research

The aforementioned research teams recently published a fascinating paper titled “Neuronal codes for arithmetic rule processing in the human brain.”

As the title intimates, the researchers identified an abstract code for processing addition and subtraction inside the human brain. This is significant because we really don’t know how the brain handles math.

You can’t just slap some electrodes on someone’s scalp or stick them in a CAT scan machine to suss out the nature of human calculation.

Math happens at the individual neuron level inside the human brain. EEG readings and CAT scans can only provide a general picture of all the noise our neurons produce.

And, as there are some 86 billion neurons making noise inside our heads, those kinds of readings aren’t what you’d call an “exact science.”

The Bonn and Tübingen teams got around this problem by conducting their research on volunteers who already had subcranial electrode implants for the treatment of epilepsy.

Nine volunteers met the study’s criteria and, because of the nature of their implants, they were able to provide what might be the world’s first glimpse into how the brain actually handles math. READ MORE...

Monday, August 2

Time Crystal

Google’s quantum computer has been used to build a “time crystal,” a new phase of matter that upends the traditional laws of thermodynamics, according to freshly published research.

Despite what the name might suggest, however, the new breakthrough won’t let Google build a time machine.

Time crystals were first proposed in 2012, as systems that continuously operate out of equilibrium. Unlike other phases of matter, which are in thermal equilibrium, time crystals are stable yet the atoms which make them up are constantly evolving.

At least, that’s been the theory: scientists have disagreed on whether such a thing was actually possible. They have argued over which kinds of time crystals could or could not be generated, and some demonstrations have met the relevant criteria partly but not completely.

In a new research preprint by researchers at Google, along with physicists at Princeton, Stanford, and other universities, it’s claimed that Google’s quantum computer project has delivered what many believed impossible.

Preprints are versions of academic papers that are published prior to going through peer-review and full publishing; as such, their findings can be challenged or even overturned completely during that review process. READ MORE
