Liquid circuits that mimic synapses in the brain can, for the first time, perform the kind of logical operations underlying modern computers, a new study finds.

Near-term applications for these devices may include tasks such as image recognition, as well as the kinds of calculations underlying most artificial intelligence systems.

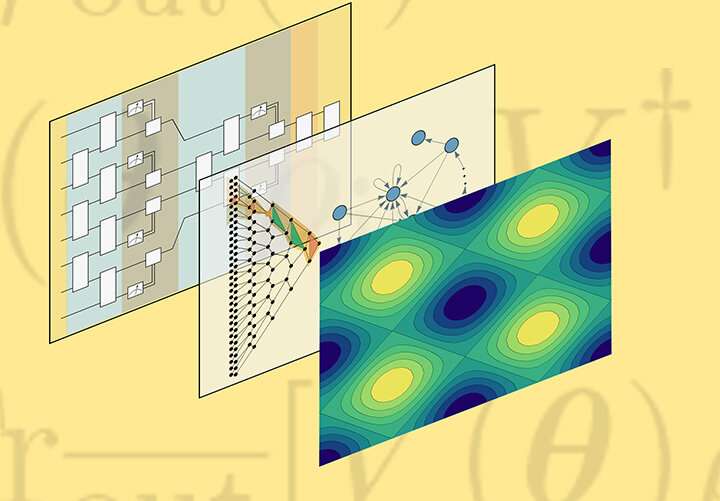

Just as biological neurons both compute and store data, brain-imitating neuromorphic technology often combines both operations. These devices may greatly reduce the energy and time lost in conventional microchips shuttling data back and forth between processors and memory.

They may also prove ideal for implementing neural networks, AI systems increasingly used in applications such as analyzing medical scans and controlling autonomous vehicles.